Oasis x Cursor: Governing Agentic Execution in the IDE

Intent-based, just-in-time access and audit trails for Cursor agents - without slowing developers.

Today, Cursor and Oasis are partnering to bring security and policy enforcement directly into the agent's execution path, keeping AI velocity high while making agent actions visible, controllable, and provable.

This launch is also a blueprint: through the Oasis AI Access Partnership Program, we’re bringing governed-execution patterns to other AI platforms, so teams can standardize policy and audit access across their agent ecosystem.

The Rise of the Agentic IDE

AI in the IDE is no longer just “autocomplete.” Agents now execute: they run commands, call MCP tools, and interact with internal systems. That’s a productivity unlock - and it also changes the security equation: execution requires access, and access without governance becomes risk at machine speed.

When a developer asks Cursor to “debug a prod error,” the agent may call an MCP tool configured last week, forward logs for analysis, and suggest a fix. The incident is resolved - but security can’t answer the hard questions: which tool was used, whether that MCP endpoint was approved, what data or secrets were included, which permissions were used, and whether production access should have required additional approval.

That’s Shadow IT at the speed of AI: automation without accountability, and permissions drifting towards “whatever works.”

Why This Matters: From “Block Everything” to “AI Zero Trust”

Historically, when security teams lack visibility, their only option is to block. They ban domains, disable extensions, or restrict AI usage entirely. However, developers still need to ship, so they find alternative paths - different tools, copied tokens, and untracked workarounds.

The fix isn’t banning agents. It’s making agent execution governable at the moment of action.

Cursor and Oasis make this practical inside the IDE. Teams can apply policy per agent action (allow, warn, require step-up approval, or deny) without breaking developer flow, while producing a decision trail for audit. This unlocks AI Zero Trust: analyze intent → tie to policy → just-in-time access → audit trail, rather than “give the agent broad access and hope nothing leaks.”

The Technical Unlock: Cursor Hooks

Cursor Hooks create an enforcement point in the agent execution workflow, allowing custom logic to run at key moments - such as before an MCP execution or after the agent runs a command.

This means we can intercept actions at the source, instead of trying to catch leaks at the network or data layer - where context is lost. By seeing who is making the request, what data they require, and where they are sending it, we can apply clear policy as part of the execution.

A Hook Example: From Script to Signal

To demonstrate how this works, let's look at a practical implementation. Below is a simple Hook to log MCP execution activity to a central webhook (which could be Oasis, or any logging endpoint).

1. The Configuration (hooks.json)

First, configure Cursor to run a hook script before any MCP tool execution by editing the hooks config file at ~/.cursor/hooks.json:

{
  "version": 1,
  "hooks": {
    "beforeMCPExecution": [{ "command": "./hooks/mcp-logger.sh" }]
  }
}

2. The Hook

We can then define the actual hook at ~/.cursor/hooks/mcp-logger.sh:

#!/bin/bash

# --- CONFIGURATION ---
WEBHOOK_URL="<YOUR_OASIS_POLICY_ENDPOINT>"

# JQ CHECK: Fail gracefully if jq is missing
if ! command -v jq >/dev/null 2>&1; then
  echo "Telemetry Warning: 'jq' not found. Skipping logging." >&2
  echo '{"permission": "allow"}'
  exit 0
fi

# --- HOOK ---

# 1. INPUT: Read the JSON input from Cursor (stdin)
INPUT=$(cat)

# 2. TRANSFORM: Extract relevant fields
PAYLOAD=$(echo "$INPUT" | jq -c '{
  email: .email,
  tool: .tool_name,
  input: .tool_input,
  model: .model,
  conversation: .conversation_id
}')

# 3. LOG: Fire to webhook in the background
# (Authentication is omitted for brevity)
curl -s -X POST -H "Content-Type: application/json" \
  -d "$PAYLOAD" "$WEBHOOK_URL" > /dev/null 2>&1 &

# 4. RESPONSE: Signal Cursor to proceed
# In production, this is where policy can be enforced by returning: allow / warn / step-up / deny
echo '{"permission": "allow"}'

Bringing AI Visibility to the Enterprise Level

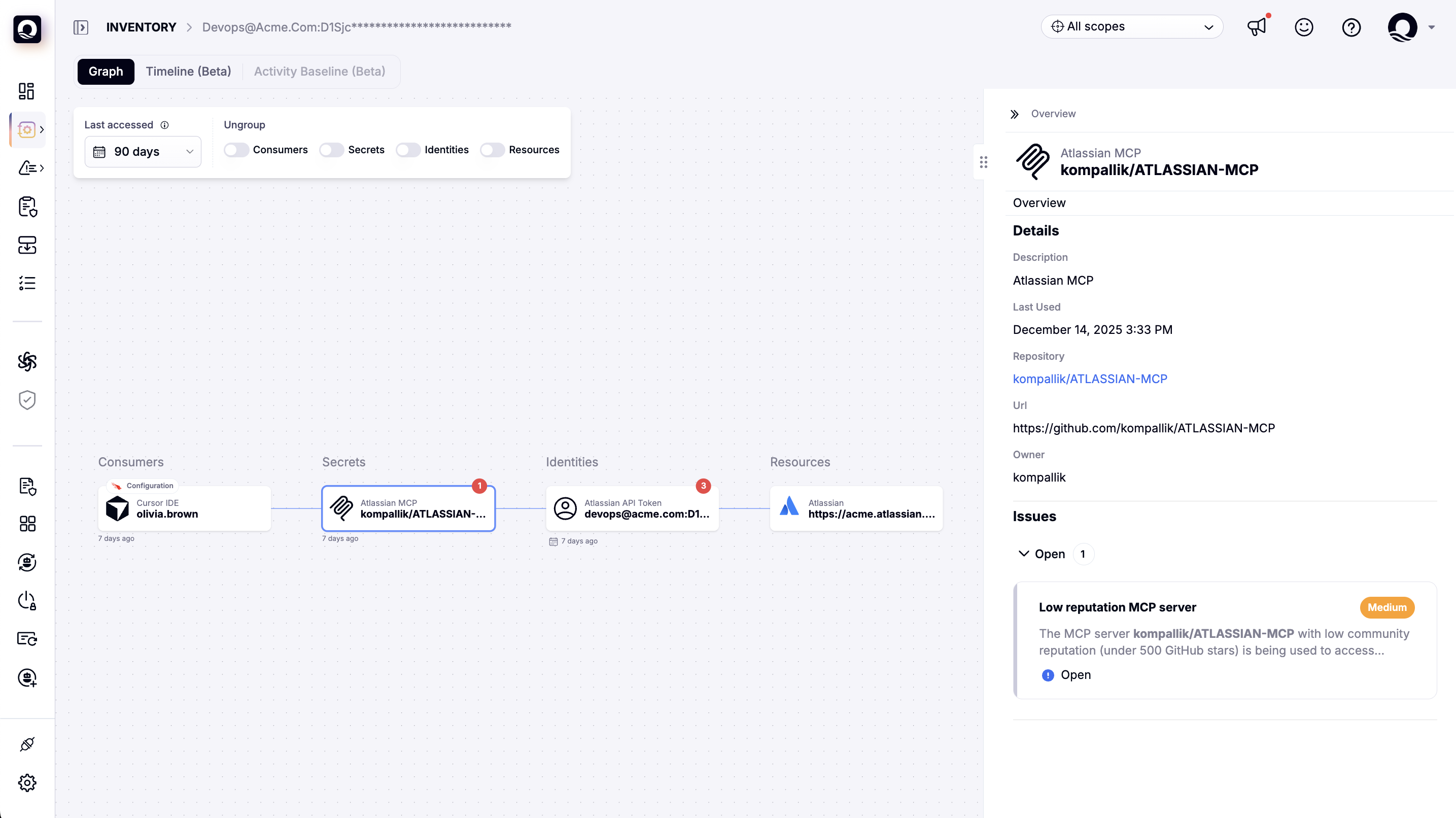

Local hooks generate events. Enterprise security needs governance: correlation, policy decisions, and audit trails. Oasis turns hook signals into artifacts security teams can act on, with a centralized Shadow AI Dashboard that correlates usage with identity and policy. This allows teams to answer critical questions instantly:

- Supply Chain Risk: Is this MCP server vetted or unknown? Is this a low-reputation script?

- Identity Attribution: Which specific developer authorized this agent?

- Asset Exposure: What internal systems and APIs is the agent accessing right now?

Scaling Security and Governance with the Oasis and Cursor Partnership

Telemetry is step one. The real power of Hooks is that the same mechanism can enforce guardrails before execution: a hook can inspect context and return allow / warn / deny (or require step-up approval) based on policy. These guardrails can be as light-touch or as strict as your organization needs.
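To make the move from telemetry to enforcement concrete, here is a minimal sketch of a decision step that could replace the unconditional allow at the end of the logging hook. It assumes the tool name has already been extracted from the hook input. The allowlist contents, the function names, and the "deny" value for the permission field are illustrative assumptions (the example above only shows "allow"), so check them against Cursor's hooks documentation and your own policy source before relying on them.

```bash
#!/bin/bash

# Hypothetical allowlist of vetted MCP tools. In a real deployment this
# would come from a central policy service, not a hard-coded variable.
APPROVED_TOOLS="github_search jira_lookup"

# decide_tool TOOL_NAME -> prints "allow" if the tool is on the allowlist,
# otherwise "deny".
decide_tool() {
  local tool="$1" approved
  for approved in $APPROVED_TOOLS; do
    if [ "$tool" = "$approved" ]; then
      printf 'allow\n'
      return 0
    fi
  done
  printf 'deny\n'
}

# hook_response DECISION -> prints the JSON object the hook writes to stdout.
# Only "allow" appears in the original example; "deny" is an assumed value.
hook_response() {
  printf '{"permission": "%s"}\n' "$1"
}

# Example: a tool that is not on the allowlist is rejected before it runs.
hook_response "$(decide_tool "unknown_scraper")"   # prints {"permission": "deny"}
```

Keeping the decision in its own function means the same hook can start in log-only mode and later switch to enforcing, without changing how the input is read or how the response is written.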

The collaboration lays the groundwork for the future of AI security: Agent Access Management (AAM).

By combining Cursor’s workflow hooks with Oasis AAM, teams can enforce intent-based access: instead of standing privileges, grant ephemeral, scoped access per task/session and revoke it immediately after completion.
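The idea of ephemeral, scoped access can be illustrated with a small sketch. This is not how Oasis AAM issues credentials. It is a simplified, in-process model in which a grant is a string of the form scope:expiry, and every name in it (issue_grant, grant_allows, the read:prod-logs scope) is hypothetical. The point is only to show the three properties named above: the grant is tied to one scope, it expires on its own, and it can be revoked as soon as the task completes.

```bash
#!/bin/bash

# issue_grant SCOPE TTL_SECONDS -> prints "SCOPE:EXPIRY_EPOCH".
# A real system would return a signed, server-issued credential instead.
issue_grant() {
  local scope="$1" ttl="$2" now
  now=$(date +%s)
  printf '%s:%s\n' "$scope" "$((now + ttl))"
}

# grant_allows GRANT SCOPE -> exit status 0 only if the grant covers SCOPE
# and its expiry time is still in the future.
grant_allows() {
  local grant="$1" wanted="$2"
  local scope="${grant%:*}" expiry="${grant##*:}"
  [ "$scope" = "$wanted" ] && [ "$(date +%s)" -lt "$expiry" ]
}

# Grant 15 minutes of read access to production logs for one task.
grant=$(issue_grant "read:prod-logs" 900)

if grant_allows "$grant" "read:prod-logs"; then
  echo "access permitted"
fi

# The same grant does not cover a different scope.
if ! grant_allows "$grant" "write:prod-db"; then
  echo "out of scope"
fi

# Revoke immediately after the task completes by discarding the grant.
grant=""
if ! grant_allows "$grant" "read:prod-logs"; then
  echo "revoked"
fi
```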

This creates a secure environment where approved agents can perform necessary tasks, but high-risk behaviors, like dumping a database schema or accessing production with a dev key, are intercepted in real time.

High-impact enforcement patterns enabled by Hooks + policy:

- Approved MCPs only: allowlist vetted MCP servers/tools by default; route new tools through an explicit onboarding workflow to prevent “shadow MCP” and supply-chain surprises.

- Production step-up: require explicit approval (or break-glass) for agent actions that touch production systems.

- Command guardrails: detect high-risk shell commands (recursive deletes, destructive Git operations, direct DB mutation) and deny or step-up based on policy.

- Payload DLP: scan outbound MCP payloads for secrets/PII/PHI; block, redact, or reroute depending on policy.
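Two of the patterns above, command guardrails and payload DLP, can be sketched as plain shell functions that a hook would call on the command string or outbound payload it receives. The patterns below are deliberately naive substring and regex matches chosen for illustration. The function names, the specific commands matched, and the mapping of each match to deny or step-up are assumptions, not Oasis policy.

```bash
#!/bin/bash

# classify_command CMD -> prints "deny", "step-up", or "allow".
# Substring matching is easy to bypass; it stands in for a real policy engine.
classify_command() {
  case "$1" in
    *"rm -rf"*|*"rm -fr"*)                      echo "deny" ;;     # recursive delete
    *"git push --force"*|*"git reset --hard"*)  echo "step-up" ;;  # destructive Git operation
    *"DROP TABLE"*|*"DELETE FROM"*)             echo "step-up" ;;  # direct DB mutation
    *)                                          echo "allow" ;;
  esac
}

# contains_secret TEXT -> exit status 0 if TEXT looks like it holds a credential.
# Checks for the AWS access key ID format and PEM private key headers only.
contains_secret() {
  printf '%s' "$1" |
    grep -Eq -e 'AKIA[0-9A-Z]{16}|-----BEGIN [A-Z ]*PRIVATE KEY-----'
}

# Example usage
classify_command "rm -rf /var/data"               # prints deny
classify_command "git push --force origin main"   # prints step-up
classify_command "ls -la"                         # prints allow

if contains_secret "aws_key=AKIAIOSFODNN7EXAMPLE"; then
  echo "payload blocked: possible secret"
fi
```

A production scanner would cover far more credential formats as well as PII and PHI, and would likely redact rather than only block, but the control flow inside the hook stays the same: classify first, then decide.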

The Path Forward: A Safer Agentic Future with the Oasis AI Access Partnership Program

This partnership is about making agentic development safe to scale: developers keep flow, and security gets enforceable policy and auditability.

We’re moving toward treating agents as distinct non-human identities that require lifecycle management: onboarding, scoped permissions, expiration, and auditable activity - just like any other identity with access.

As agentic workflows evolve, governance has to move from the perimeter into the execution path. That’s why we’re opening the Oasis AI Access Partnership Program to platforms and teams that want to build governed agent workflows by default.

Ready to secure your AI workforce? Join the Oasis AI Access Partnership Program
